The term "integration test" is one of the most overloaded in software development. Ask five different engineers, and you'll get five different definitions. This lack of clarity creates real problems for teams trying to implement effective testing strategies, especially in continuous delivery environments.

Defining Integration Tests

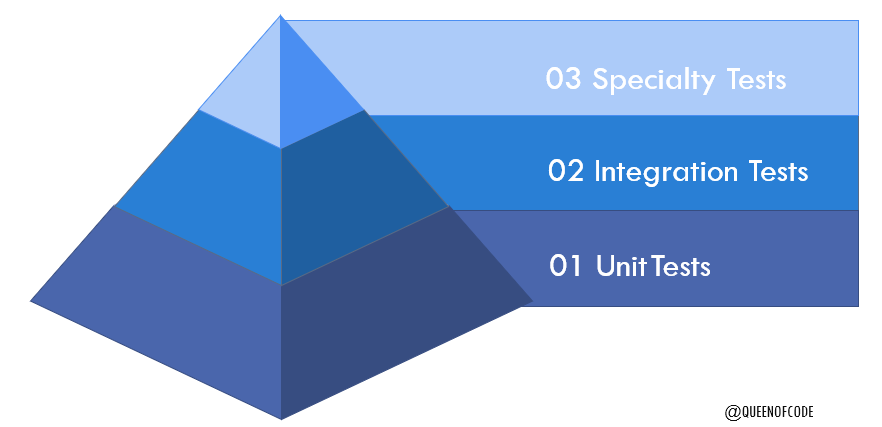

In its purest form, an integration test verifies that two or more components work correctly together. But this definition is so broad that it encompasses everything from API tests to end-to-end system tests.

More specifically, integration tests should verify:

- Component interfaces behave as expected

- Data flows correctly between components

- Error handling works across boundaries

- Non-functional requirements (performance, security) are met at integration points
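The sketch below illustrates the first three points, using Python's standard library. `OrderRepository`, `OrderService`, and `InvalidOrder` are hypothetical components invented for illustration, and an in-memory SQLite database stands in for a real data store. One test checks that data written through the service is what the database returns. The other checks that a storage-level constraint violation surfaces as a domain error at the service boundary.

```python
import sqlite3
import unittest
from typing import Optional, Tuple


class InvalidOrder(Exception):
    """Hypothetical domain error raised by the service layer."""


class OrderRepository:
    """Hypothetical data-access component backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        # The CHECK constraint lives in the database, not in Python code
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            "id INTEGER PRIMARY KEY, item TEXT NOT NULL, "
            "qty INTEGER NOT NULL CHECK (qty > 0))"
        )

    def save(self, item: str, qty: int) -> int:
        cur = self.conn.execute(
            "INSERT INTO orders (item, qty) VALUES (?, ?)", (item, qty)
        )
        self.conn.commit()
        return cur.lastrowid

    def get(self, order_id: int) -> Optional[Tuple[str, int]]:
        # Returns None when no row matches
        return self.conn.execute(
            "SELECT item, qty FROM orders WHERE id = ?", (order_id,)
        ).fetchone()


class OrderService:
    """Hypothetical business component that depends on OrderRepository."""

    def __init__(self, repo: OrderRepository) -> None:
        self.repo = repo

    def place_order(self, item: str, qty: int) -> int:
        try:
            return self.repo.save(item, qty)
        except sqlite3.IntegrityError as exc:
            # Translate a storage-layer error into a domain error at the boundary
            raise InvalidOrder(str(exc)) from exc


class OrderIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.service = OrderService(OrderRepository(self.conn))

    def tearDown(self) -> None:
        self.conn.close()

    def test_order_is_persisted(self) -> None:
        order_id = self.service.place_order("widget", 3)
        # Data flow: what the service wrote is what the database returns
        self.assertEqual(self.service.repo.get(order_id), ("widget", 3))

    def test_invalid_quantity_becomes_domain_error(self) -> None:
        # Error handling across the boundary: CHECK violation -> InvalidOrder
        with self.assertRaises(InvalidOrder):
            self.service.place_order("widget", 0)
```

Saved as a module, the tests can be run with `python -m unittest <module_name>`. The example uses a real (if in-memory) database rather than a mock on purpose: the CHECK constraint and the error translation only get exercised when both components actually run together.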

Why Integration Tests Matter for Continuous Delivery

Integration tests play a critical role in continuous delivery pipelines:

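- They catch issues that unit tests miss, such as mismatched interfaces, incorrect data mappings, or wrong assumptions about how another component behaves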

- They provide confidence without the fragility of full end-to-end tests

- They validate real-world interactions without requiring the entire system

The key to successful continuous delivery isn't eliminating every bug; it's catching the important ones quickly and reliably.

Common Integration Testing Pitfalls

Many teams struggle with integration testing because they:

- Make tests too broad, creating slow and flaky test suites

- Fail to isolate the integration points, leading to cascading failures

- Don't consider environment differences between test and production

- Mix different test types under the "integration test" label

Best Practices for Integration Testing

To implement effective integration testing:

- Define clear boundaries for each integration test

- Use test doubles for components outside the integration boundary

- Automate environment setup and teardown

- Run integration tests as early as possible in your pipeline
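Several of these practices can be combined in a single test, sketched here with Python's standard library. The integration boundary covers a hypothetical `CheckoutService` and a real SQLite database file. The third-party payment provider lies outside that boundary, so a hand-written `FakePaymentGateway` test double replaces it. `setUp` creates a fresh temporary directory for each test and registers cleanups, so the environment is built and torn down automatically. All class and method names are illustrative assumptions, not the API of any particular framework or payment provider.

```python
import os
import sqlite3
import tempfile
import unittest
from typing import List, Protocol, Tuple


class PaymentGateway(Protocol):
    """Hypothetical interface to a service outside the integration boundary."""

    def charge(self, customer_id: str, amount_cents: int) -> str: ...


class CheckoutService:
    """Hypothetical component under test; stores payments in real SQLite."""

    def __init__(self, db_path: str, gateway: PaymentGateway) -> None:
        self.conn = sqlite3.connect(db_path)
        self.gateway = gateway
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS payments "
            "(charge_id TEXT PRIMARY KEY, customer TEXT, amount INTEGER)"
        )

    def checkout(self, customer_id: str, amount_cents: int) -> str:
        charge_id = self.gateway.charge(customer_id, amount_cents)
        with self.conn:  # commits on success, rolls back on exception
            self.conn.execute(
                "INSERT INTO payments VALUES (?, ?, ?)",
                (charge_id, customer_id, amount_cents),
            )
        return charge_id

    def close(self) -> None:
        self.conn.close()


class FakePaymentGateway:
    """Test double: records calls instead of contacting a real provider."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, int]] = []

    def charge(self, customer_id: str, amount_cents: int) -> str:
        self.calls.append((customer_id, amount_cents))
        return f"fake-charge-{len(self.calls)}"


class CheckoutIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # Automated setup: a fresh on-disk database in a temporary directory
        tmp = tempfile.TemporaryDirectory()
        self.addCleanup(tmp.cleanup)  # cleanups run even if the test fails
        self.gateway = FakePaymentGateway()
        self.service = CheckoutService(
            os.path.join(tmp.name, "test.db"), self.gateway
        )
        self.addCleanup(self.service.close)  # registered last, so runs first

    def test_checkout_records_payment(self) -> None:
        charge_id = self.service.checkout("cust-42", 1999)
        row = self.service.conn.execute(
            "SELECT customer, amount FROM payments WHERE charge_id = ?",
            (charge_id,),
        ).fetchone()
        # Inside the boundary: the real database holds the payment
        self.assertEqual(row, ("cust-42", 1999))
        # Outside the boundary: the double saw exactly one charge request
        self.assertEqual(self.gateway.calls, [("cust-42", 1999)])
```

Because `addCleanup` callbacks run in reverse order of registration, the database connection is closed before the temporary directory is deleted, which matters on platforms that refuse to delete open files.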

Integration tests, when implemented well, strike a practical balance between the speed of unit tests and the confidence of end-to-end tests, making them essential for teams practicing continuous delivery.